Sentiment Analysis using NLTK NB Classifier

Sentiment Analysis is one of the most prominent application of Natural Language Processing, as it helps us understand the public opinion on a given topic. This insight can be used by companies to identify possible issues and resolve them to improve customer experience. In today’s blogpost we are going to implement a simple Naive Bayes Classifier using NLTK library to analyze tweets.

We are going to use twitter samples corpus, which contains 5000 positive and negative labelled tweets. Let us first import the necessary libraries to process these tweets. We will need to first tokenize each tweet and remove the stopwords as they do not contribute much to the meaning or sentiment of the sentence. We will also be lemmatizing the words present in the sentence, so that we can focus on the meaning of the word rather than the form it occurs in.

import nltk

from nltk.corpus import twitter_samples,stopwords

from nltk.tokenize import word_tokenize

import string

import validators

import numpy as np

from nltk.stem import WordNetLemmatizer

nltk.download('wordnet')

nltk.download('twitter_samples')

nltk.download('stopwords')

WL = WordNetLemmatizer()

Let us now get the dataset we are going to work on. We will be importing the tweets from ‘twitter samples’ and also assign the labels of 0 and 1 for negative and positive tweets respectively.

all_positive_tweets = twitter_samples.strings('positive_tweets.json')

all_negative_tweets = twitter_samples.strings('negative_tweets.json')

tweets = all_positive_tweets + all_negative_tweets

labels = np.append(np.ones((len(all_positive_tweets),1)), np.zeros((len(all_negative_tweets),1)), axis = 0)

print(labels.shape)

We will now define a function Proccess_Tweet to process the tweets in our dataset. After tokenizing, we will be removing the stop words, URLs etc. so that we can focus on the important words present in the sentence.

def Process_Tweet(tweet):

tweet = tweet.replace("n't"," not").replace("'m"," am").replace("'ve"," have").replace("’","'").replace('`','')

tweet = tweet.split()

alphabets = "qwertyuioplkjhgfdsazxcvbnm"

stop_words = set(stopwords.words('english'))

tweet = [w.lower() for w in tweet if w not in stop_words and w[0] not in string.punctuation and not validators.url(w) and w[0] in alphabets]

tweet = word_tokenize(" ".join(tweet))

tweet = ["<s>"]+[WL.lemmatize(w) for w in tweet if w not in string.punctuation] +["<\s>"]

out = []

return tweet

Let us now process each tweet present in our dataset and split them as training and testing set. Along with this we will create a vocabulary set which will contain the unique words present.

from sklearn.model_selection import train_test_split

processsed_i = []

X = []

Y = []

vocab = set()

for i in range(len(tweets)):

processsed_i = Process_Tweet(tweets[i])

print("\rTweets",str(i+1),":",processsed_i,end="")

if processsed_i != []:

vocab.update(processsed_i)

if labels[i]==1:

Y.append(1)

else:

Y.append(0)

X.append(processsed_i)

print("\nNumber of tweets:", len(X),len(Y))

vocab = list(vocab)

print("\nVocab Size:",len(vocab))

x_train,x_test,y_train,y_test = train_test_split(X,Y,test_size=0.2,shuffle=True)

The feature of our Naive Bayes model is going to contain what words from our vocabulary are present or absent in the given tweet. For this we are going to make a base dictionary which will have all words as False, while we deal with the tweets, we will make the words present in that tweet alone as True. In the below code we are going to make the base dictionary.

vocab_dict = {}

counter = 0

for i in list(vocab):

vocab_dict[i] = False

print("\rCounter:",counter,end = "")

counter+=1

Next up we will format our training and testing data as required by the NLTK NB Classifier. For this we are going to create the feature dictionary for each tweet, with only the words that are present in Tweet as True and rest as False.

from nltk import classify

from nltk import NaiveBayesClassifier

train_data = []

test_data = []

print(len(y_train))

for i,j in zip(x_train,y_train):

ins_dict = vocab_dict.copy()

for w in i:

if w in ins_dict.keys():

ins_dict[w] = True

train_data.append((ins_dict,j))

for i,j in zip(x_test,y_test):

ins_dict = vocab_dict.copy()

for w in i:

if w in ins_dict.keys():

ins_dict[w] = True

test_data.append((ins_dict,j))

print(train_data[:10])

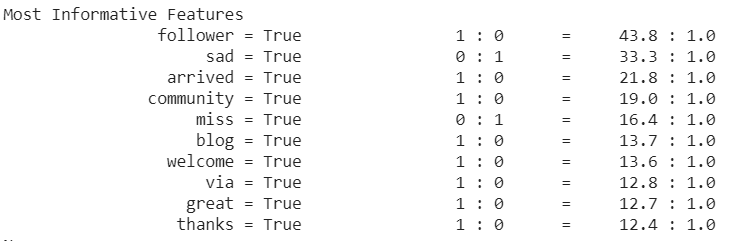

Finally, we will train our classifier using the training dataset. The training will take a few minutes and once done we can test its performance on our testing data. We can also retrieve the most informative features from our classifier as shown in Figure 1.

classifier = NaiveBayesClassifier.train(train_data)

print("Accuracy is:", classify.accuracy(classifier, test_data))

print(classifier.show_most_informative_features(10))

Drawbacks

You will notice that the accuracy on testing data is around 70%, which while decent is not great. There can be various reasons for the low accuracy with some of them listed below:

- Lack of information regarding the order in which the words appear.

- The meaning of the word is not understood but rather their appearance in positive or negative tweets is taken as the only parameter.

- Only basic processing of the tweet is done and therefore many important information is lost.

You can find the code for this blogpost in this github repo.