NLP: Uniqueness of Languages

Languages have played a crucial role in defining human history. It isn’t hard to see the correlation between languages and cultures, and it thus learning a language isn’t much different from learning a new way of life. NLP deals with precisely this, learning features and nature of languages. However, it is necessary that we remember to uniqueness of each language and therefore the same methods cannot be used across different languages.

Often when we mention the term Natural Language Processing, we assume that we are talking about English processing system, and is often the case. The reason for this is simple, there wasn’t enough well structured datasets available. Furthermore most tools that we use today are developed with English in mind. One of the most basic steps in NLP pipeline is Tokenizing and Lemmatization (or Stemming). While it is relative easy to do the same in English (thanks to wide range of tools available), it is not so in other languages, but before we get into it, we need to realize the need for it.

Social Disadvantages and Reinforced Bias

We often forget that technology is only available to those who can understand it. An application made solely in English is only accessible to the English speaking population. However, we must remember that the majority of the world does not speak English or adjacent languages, and therefore are at a disadvantage. Similarly, enabling NLP for only English adjacent languages, comes with it’s real world consequences, disabling people (and consumers) who aren’t familiar with it.

Further we must remember that language often brings forward the culture it is associated with. When we restrict our ML models to just one language, it along with the language picks up the biases and norms of that culture and literature. This makes our model specific to that region and often cannot be used in others due to different standards.

Uniqueness of Languages

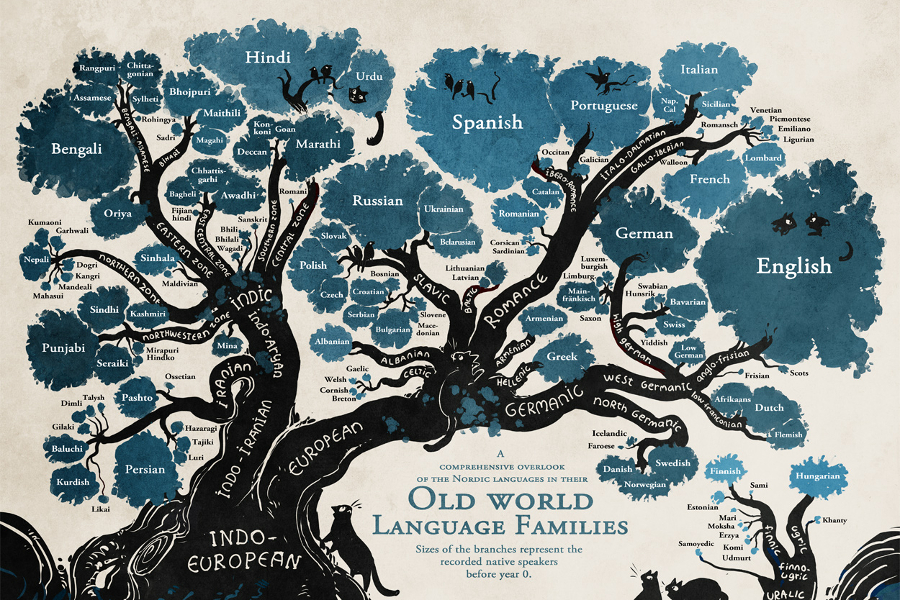

While we can clearly see the need for NLP in various languages, implementing them does have their own constraints. Let us consider a few indic languages to further analyze these constraints. Many indic languages (like Hindi, Sanskrit etc.) belong to the same language family as English and we can notice the phoenetic link between various words but the writing style and grammar has diverged significantly. Likewise, southern languages have similarities between them and also noticeable differences, which need to be accounted for while developing a NLP system.

Let us consider one of the most prominent difference between English and Indic languages, which is that in Indic languages use a lot more compound words, which are formed by combination of two or more stem words. This poses an interesting problem as regular stemming of words is not sufficient as we need to identify the various stems that make the word to fully understand its meaning.

Another example where the language has a noticeable difference from English is Japanese, where unlike English there are no gaps between words. This creates an interesting problem where tokenization of the sentence cannot be easily done and we must first perform Segmentation to identify the various meaning words from the sentence before processing it.

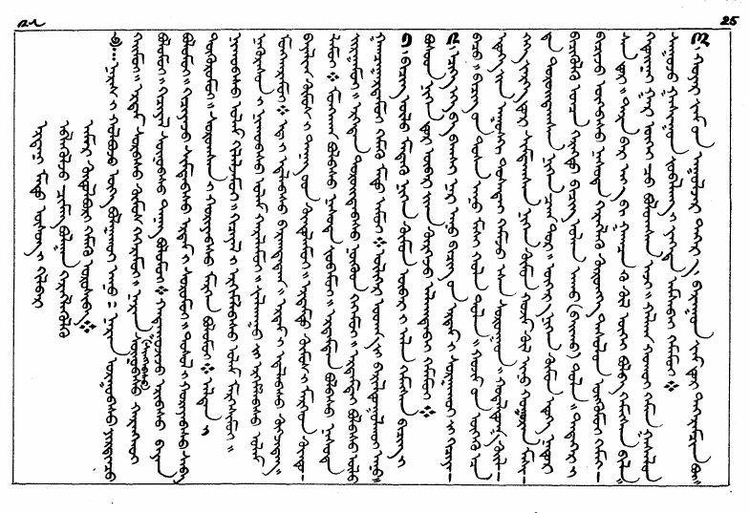

Another interesting problem is presented by languages like Hebrew, Arabic and Urdu, which unlike most European languages follow to Right-to-Left script, where the writing starts from right of the page and goes to the left. Mongolian on the other hand is written top to bottom, which goes completely against what we presume as normal.

Conclusion

Languages is certainly the greatest invention humankind came up with, as it not only helps us communicate with each other, but also is a means to understand human history and cultures. Restricting ourselves to a few languages blind AI from understanding the complexity of various cultures and regions. Therefore it becomes a necessity to develop models and corpora in various languages to enable machines that satisfy the needs of a broader scope of humanity. In the next week’s blogpost I will be going through the same, highlighting a few researches and tools that enable NLP in the context of Indic Languages.