OCR using PyTesseract

Optical Character Recognition, abbreviated as OCR has become a very import aspect of industry, as businesses have been ending towards automation in recent times. OCR is the process by which the the computer identifies and understand the words that are present in an image or a scanned document.

Let us consider the application in Figure 1. While the focus of the application is to perform translation between two languages, it is enhanced through the use of OCR, such that the users can get the translation merely by viewing the image through their camera. In today’s post we will be performing OCR using PyTesseract Library.

Installing Tesseract

Before proceeding with the implementation, we must first make sure that our systems have Tesseract installed. The instructions discussed in this post are based on Windows OS however you can find the equivalent instructions for other OS on the internet.

Apart from installing the PyTesseract Library, we also require the Tesseract Engine in our system. For windows the installer is available here. Once the engine is installed, make sure that eng.traineddata is available in the tessdata folder. This file acts as the trained data for identifying words in English Language. Similarly, trained data for various files is available here. For the execution of today’s code you will require the trained data files for Hindi and Tamil.

Finally, we must make sure that PyTesseract Library is available in our python environment. If not already installed, you can do so using the below command

pip install pytesseract

PyTesseract Implementation

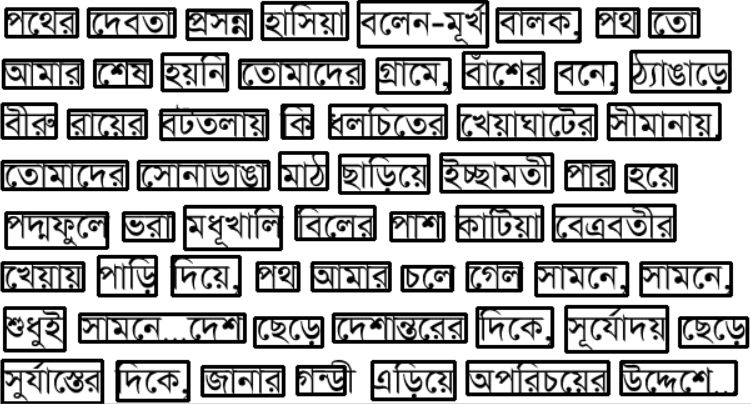

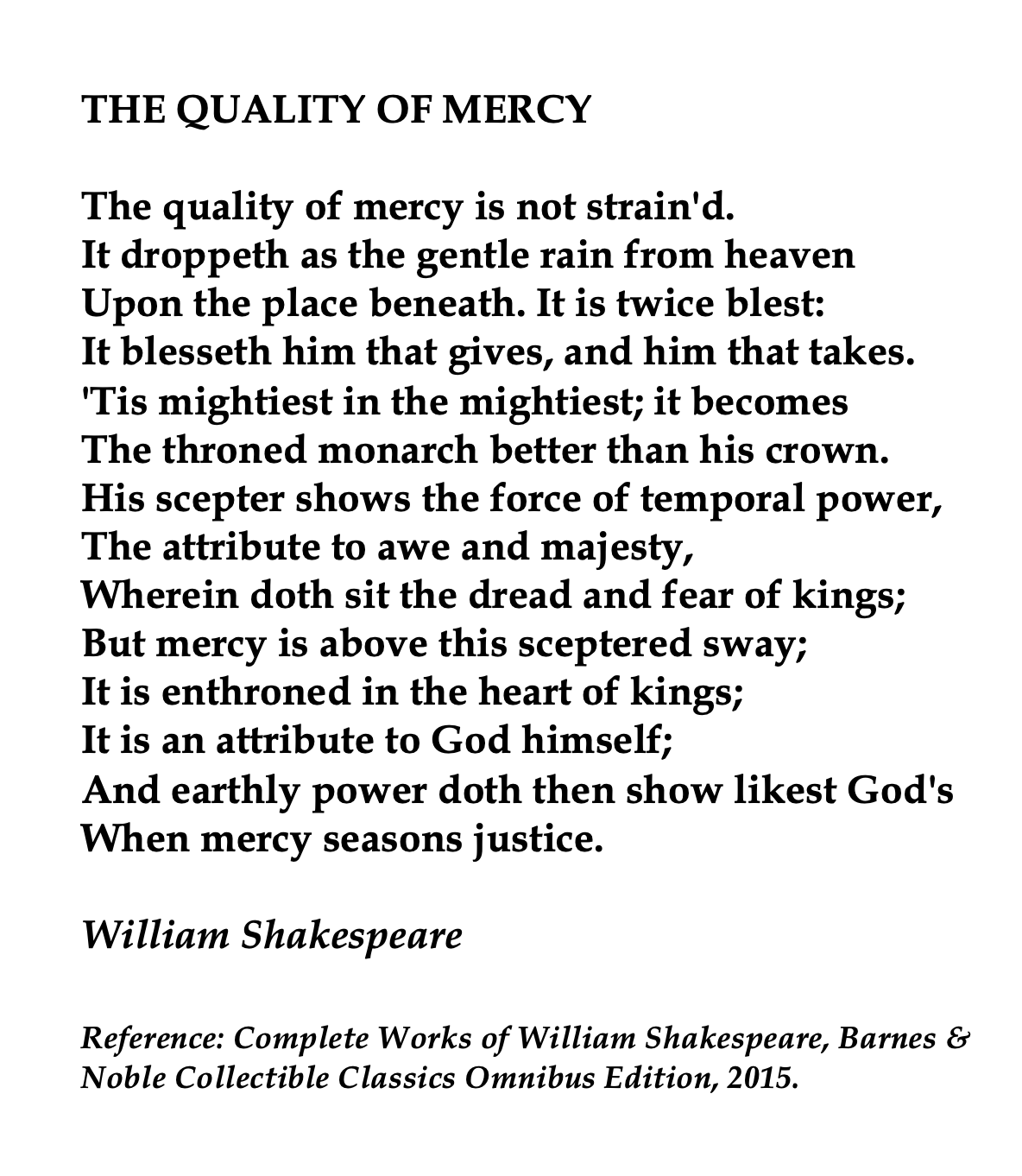

Let us consider the image in Figure 2. It contains a famous poem of William Shakespeare, however it is not possible to process the text present in it. This is where OCR comes in. Our objective is to identify the words present in this image and will be doing that using the pre-trained tesseract engine.

Firstly, we must import the necessary libraries. We will be using OpenCV library to process the image and then PyTesseract to identify the characters present in the image. We will be also needing Pandas and Numpy libraries to process the information retrieved using PyTesseract. Finally, we will need to mention the location of our tesseract engine so that our python environment knows where to find Tesseract Engine and avoid TesseractNotFoundError (For Windows, Path may vary).

import cv2

import pytesseract

import pandas as pd

import numpy as np

pytesseract.pytesseract.tesseract_cmd = r'C:\Program Files\Tesseract-OCR\tesseract.exe'

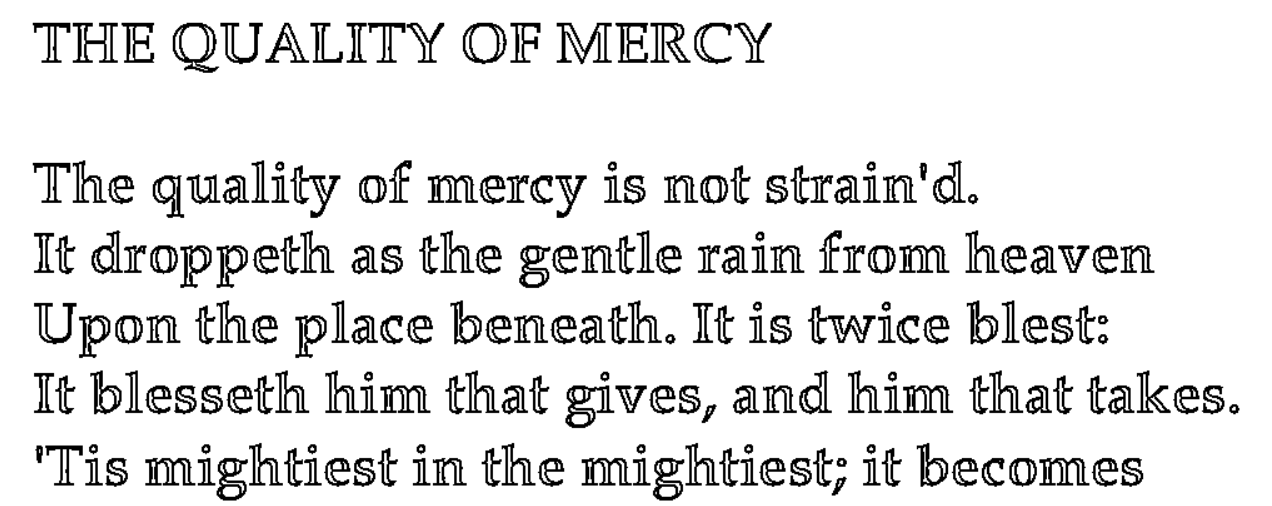

Next we need to input the image and preprocess it. We are going to input the image as grayscale and apply Adaptive Gaussian Threshold to distinguish between the background and foreground. The adaptive gaussian threshold uses the weighted sum of neighbourhood values where weights are a gaussian window.

filepath = input("Enter the filepath: ")

filename = input("Enter the filename: ")

image = cv2.imread(filepath+filename,0)

input_img = cv2.adaptiveThreshold(image,255,cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY,5,5)

cv2.imshow('Adaptive Gaussian Threshold Image', input_img)

cv2.waitKey(0)

cv2.destroyAllWindows()

Now we will use the tesseract engine to identify the words present in our input image. Tesseract can work on various configurations which is determined based on the use case. In the below code, we are setting the following configurations:

- OEM (OCR Engine Mode) as default (value of 3)

- PSM (Page Segmentation Mode) as 1 (Automatic page segmentation with OSD).

- Language as English After this, we are removing all the predictions that do not contain any words in them (Spaces or empty strings).

df = pytesseract.image_to_data(input_img, output_type="data.frame",config='--psm 1 --oem 3',lang='eng')

df = df[df.conf != -1]

df = df.replace(r'^\s*$', np.nan, regex=True).dropna()

details = df.to_dict()

print(details.keys())

print(details['text'])

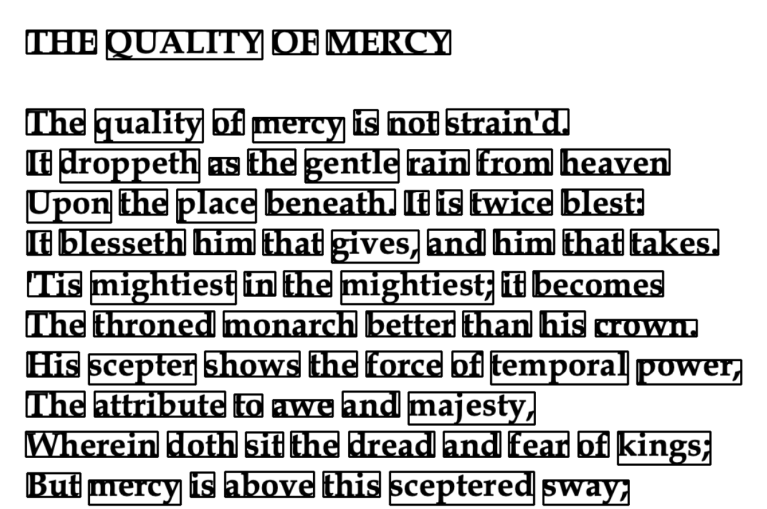

Finally, we will draw bounding boxes around the various words that we identified in the given image. We can also retrieve the words from the ‘text’ column in our output dataframe.

sequence = details['text'].keys()

for key in sequence:

if int(details['conf'][key]) >20:

(x, y, w, h) = (details['left'][key], details['top'][key], details['width'][key], details['height'][key])

img = cv2.rectangle(image, (x, y), (x + w, y + h), (0, 0, 255), 2)

cv2.imshow('captured text', img)

cv2.waitKey(0)

cv2.destroyAllWindows()

Conclusion

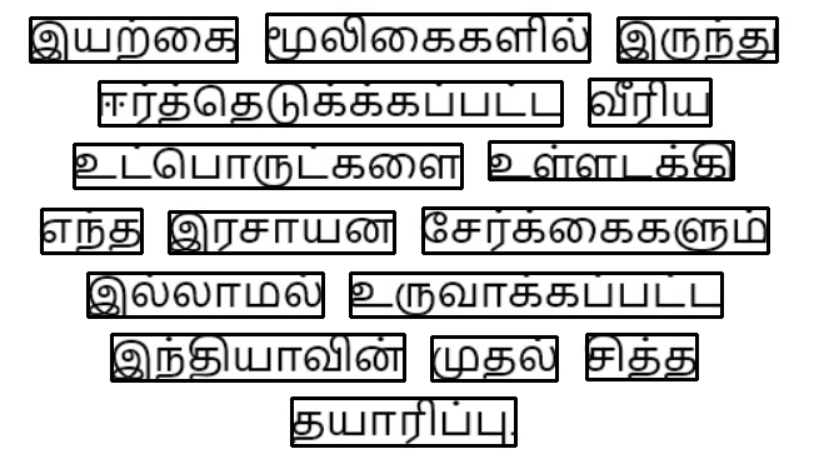

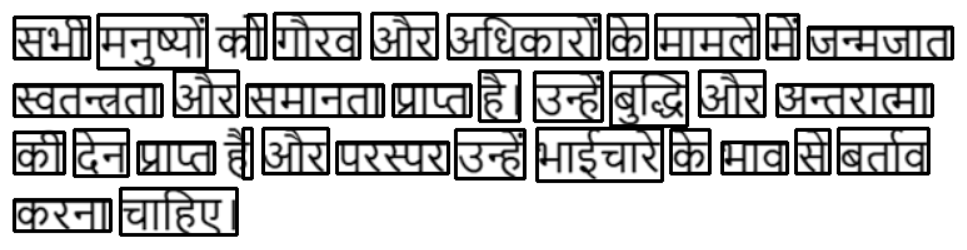

In today’s post we discussed the PyTesseract Library and how it can be used to perform OCR Operations on Images. We can also perform similar operations on various languages facilitated by Tesseract Engine. Below are two such examples in Hindi and Tamil.