Generating text using CharRNN

In the last week’s blogpost we discussed about Recurrent Neural Networks and saw how it can be used in tweet classification. In today’s post we are going to continue with RNNs and look at how a simple architecture can generate texts.

CharRNN stands for character RNN, where the network is used to predict the next character given a sequence of characters preceding it. This methodology can be used to generate texts given a starting sequence. However one of the major flow regarding this method is that as we are predicting one character at a time, it is possible to generate words that have no meanings.

We will be used Shakespeare’s sonnets as our corpus to train our network. We have all the sonnets in a text file as shown in the below figure. Our first objective is to extract this text from the file and preprocess it, by removing the punctuations and changing all characters to lowercase.

Implementation

In the below code, we are going to remove the punctuations and extract the sonnet and convert them into sequence of characters.

import re

import numpy as np

f = open('sonnets.txt')

text = f.read().lower().replace('\t','')

text = re.sub(r'[^\w\s]', '', text)

text = re.sub(r"sonnet.*\n", 'newpoem\n', text)

vocab = list(set(text))

text = [i for i in text.split("\n") if i!=""]

text.pop(0)

sonnets = []

temp = ""

for i in text:

if i != "newpoem":

temp += i+" \n"

else:

sonnets.append(temp)

temp = ""

Next we will be creating two dictionaries, one to convert a given number/index to character and other doing the opposite. Apart from this we will be defining vocab_size variable to store the size of our vocabulary, which is 28 in this case.

i2c_dict = {}

c2i_dict = {}

for i in range(len(vocab)):

i2c_dict[i] = vocab[i]

c2i_dict[vocab[i]] = i

vocab_size = len(vocab)

Using the character to index dictionary we had defined above, we are going to now encode the sequence of characters we had extracted from the sonnets into numbers. We are also going to define X and Y which we will use for training and testing our model. Our X is going to be a sequence of 50 characters (represented as numbers) and Y is going to be the character that comes right after the 50 characters. The output is going to be one-hot encoded so that we can treat this a multi-class classification problem.

def one_hot(a,vocab_size):

a = np.array(a)

b = np.zeros((a.size, vocab_size))

b[np.arange(a.size),a] = 1

return b

X = []

Y = []

counter = 1

for i in sonnets:

print("\r"+str(counter)+"/154",end="")

counter = counter + 1

for j in range(50,len(i)):

x_val = [c2i_dict[k] for k in i[j-50:j]]

y_val = one_hot([c2i_dict[i[j]]],vocab_size)

X.append(x_val)

Y.append(y_val)

X_array = np.array(X).reshape(len(X),50,1)

Y_array = np.array(Y).reshape(len(Y),vocab_size)

Let us now split the X and Y arrays for training and testing.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X_array, Y_array, test_size=0.1, random_state=10)

We will now import the necessary functions from the Keras framework. We will be discussing the use of these functions later, when we use them.

from keras import Model

from keras.models import Sequential,load_model

from keras.layers import Dense, Dropout, LSTM, Embedding

from keras.callbacks import ModelCheckpoint, Callback

from keras.optimizers import Adam

Before defining our model, we are going to be defining a custom callback, named PredictCallback, which will predict the output for a given input at the end of each epoch. This will give us an idea on how well the model is performing at each epoch. We are going to be generating 50 characters using our model to understand the model’s performance better.

def nextinput(x,pred):

x = x[1:]

pred = np.argmax(pred)

x = np.append(x,pred)

return x,pred

def printarray(arr):

string = ""

for i in arr:

string = string + i2c_dict[i[0]]

return string

class PredictCallback(Callback):

def on_epoch_end(self, epoch, logs={}):

keys = list(logs.keys())

inp = X_test[10]

print(printarray(inp),end="")

X_Val = np.reshape(inp,(1,50,1))

for i in range(50):

pred = self.model.predict([X_Val],verbose = 0)

pred = np.reshape(pred,(28,1))

inp,pred = nextinput(inp,pred)

X_Val = np.reshape(inp,(1,50,1))

character = i2c_dict[pred]

print(character,end="")

print("\n")

Let us now define our model. We are going to first add an embedding layer which will embed each character into a vector of length 256. Followed by this we are going to have an LSTM layer to under the sequence better. Finally we are going to have a fully connected network to determine the output.

def create_model(inp_shape,batch_size,vocab_size):

model = Sequential()

model.add(Embedding(vocab_size,256,input_length=50))

model.add(LSTM(512, input_shape = (None,50,256), activation = 'relu'))

model.add(Dense(2048,activation='relu'))

model.add(Dropout(0.1))

model.add(Dense(2048,activation='relu'))

model.add(Dropout(0.1))

model.add(Dense(2048,activation='relu'))

model.add(Dropout(0.1))

model.add(Dense(vocab_size,activation='softmax'))

return model

BATCH_SIZE = 64

model = create_model(X_array[0].shape,BATCH_SIZE,vocab_size)

model.summary()

Next we will compile our model using Adam Optimizer and Categorical Crossentropy loss function.

opt = Adam(learning_rate=0.0005)

checkpoint = ModelCheckpoint(filepath='model.{epoch:02d}-{loss:.2f}.h5')

callbacks_list = [checkpoint,PredictCallback()]

model.compile(optimizer=opt, loss='categorical_crossentropy', metrics=['accuracy'])

It’s time to train our model. We are going to save the weights at the end of each epoch using ModelCheckpoint, while predicting the output in each epoch using the custom epoch that we had defined.

history = model.fit(x=X_train,y=y_train,epochs=20,validation_split=0.1,batch_size=BATCH_SIZE,validation_steps=20,callbacks=callbacks_list,shuffle=True)

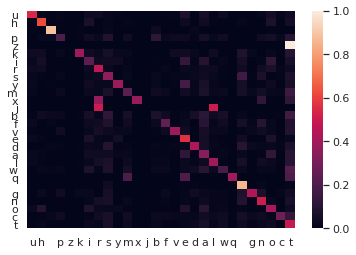

Once the model is trained (approximately takes 4 hours) we can use this model to predict the output for the testing data and compare it with the actual output. We can plot this as a confusion matrix to help in visualizing the output.

import seaborn as sns; sns.set_theme()

from sklearn.metrics import classification_report, confusion_matrix

y_pred = model.predict(X_test,verbose = 1)

y_pred_val = np.argmax(y_pred,axis=1)

y_test_val = np.argmax(y_test,axis=1)

cm = confusion_matrix(y_test_val, y_pred_val)

cm = (cm.T/cm.sum(axis=1)).T

sns.heatmap(cm,xticklabels=vocab, yticklabels=vocab)

Below is the confusion matrix for the model. However the performance of the model will vary a little due to stochastic nature of the algorithm.

The below is the output generated using our model, given the first 50 characters. while the model is able to generate words well. However, the structure of sentence is not well defined and the theme of the poem is completely post. This is because with each letter we predict, we are replying on the output of the model to predict the next one.

You can find the code for this blogpost in this github repo.